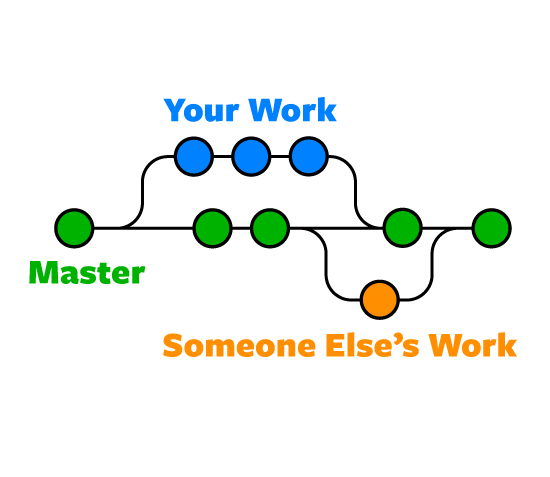

IBM Cloud is one of the tools our team used to build the Missing Map project, and we would like to share our experience with it. At the start, the IBM Cloud tutorials gave us a brief introduction and enough guidance to become familiar with the tools, so we could start the project and add new ideas easily. IBM offers a large number of tools. Watson AI is powerful and effective. Visual Recognition in particular was important to this project: we could train it on classified images and build the final result. Another tool is Node-RED, an understandable and useful programming tool. It provides a browser-based flow editor that let us write code and organize it as nodes placed and wired together in a flow. For instance, we used it to organize the code of our website.

All elements are in their proper position and connected in sequence, which makes it easier to add functions and to debug.

Although we found many benefits while developing the project, some challenges remain. Hidden functionality sometimes perplexed us, and some instructions are unclear. Creating Cloud Foundry applications can sometimes lead to complex problems. For instance, one of our team members might be unable to create or modify these applications. In addition, other associated applications and services are created whenever we create a new Cloud Foundry application.

For example, when we created a Node-RED Starter, SDK for Node.js and Cloudant were also created, but there was no clear and specific description or instruction to help users understand how this works.

Similarly, storage is required when creating a new project in IBM Watson Studio, and the lack of description and instructions confused us at the beginning. We used Node-RED for our project, but unfortunately the Node-RED project feature is not currently available in the IBM Cloud environment. Another problem is uninformative logs.

Although the logs record operations (type, instance, logs, time, actions) and errors, they are not specific or clear. We can also view the logs in Kibana, but the same problem exists there.

Reading and figuring out the logs can take a lot of time. It would be better to add descriptions to the logs, which would make it easier for both developers and other team members to understand the operations. Another problem is the order and completeness of the logs. We can view the logs ordered by time. If we choose newest-first order, it does not match the usual command-line order. On the other hand, if we choose oldest-first order, we have to scroll to the bottom of the page manually every time we refresh it.
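
As a rough illustration of what we had in mind, the sketch below sorts log entries oldest-first (the usual command-line order), keeps only the most recent few so no scrolling is needed, and attaches a short description to each action. The record layout, the sample entries, and the names `ACTION_NOTES`, `tail_oldest_first`, and `describe` are all our own assumptions for illustration; none of them are an IBM Cloud or Kibana API or log schema.

```python
from datetime import datetime

# Hypothetical log records. The field names echo what the log viewer shows
# (type, instance, time, action) but are NOT an actual IBM Cloud schema.
SAMPLE = [
    {"time": "2019-05-01T10:00:03", "type": "APP", "instance": "0",
     "action": "start", "message": "listening on port 1880"},
    {"time": "2019-05-01T10:00:01", "type": "STG", "instance": "0",
     "action": "stage", "message": "uploading droplet"},
    {"time": "2019-05-01T10:00:07", "type": "APP", "instance": "0",
     "action": "crash", "message": "exit status 1"},
]

# Hypothetical human-readable notes for each action (our own wording).
ACTION_NOTES = {
    "stage": "building the app image",
    "start": "app process launched",
    "crash": "app process exited unexpectedly",
}


def tail_oldest_first(entries, n):
    """Return the n most recent entries, ordered oldest to newest."""
    ordered = sorted(entries, key=lambda e: datetime.fromisoformat(e["time"]))
    return ordered[-n:] if n > 0 else []


def describe(entry):
    """Format one entry as a single line with a short description appended."""
    note = ACTION_NOTES.get(entry["action"], "no description available")
    return (f'{entry["time"]} [{entry["type"]}/{entry["instance"]}] '
            f'{entry["action"]}: {entry["message"]} ({note})')


if __name__ == "__main__":
    for entry in tail_oldest_first(SAMPLE, 2):
        print(describe(entry))
```

With a view like this, the newest entry always appears at the bottom of a short list, which is how a terminal would show it.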

The lack of community resources is another problem. We usually had to find usable resources on websites and in datasets, and we often ran into difficulties in the process. Some resources do not match the tool and cannot be used directly. IBM Cloud needs a community resource library.

We made great progress through the experience of developing a project with IBM Cloud. Although the tool presents some challenges, IBM Cloud could become one of the most powerful development tools.